How to Build a Google Lens Application in Android?

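Extracting and analyzing text from photos or video streams is a valuable capability that can significantly improve the functionality and user experience of an Android application. Google Lens, an image recognition and augmented reality technology developed by Google, is a well-known example. Replicating the full feature set of Google Lens is a formidable task, but we can look at a simpler implementation that uses the Google Vision API and focuses on text recognition in an Android application.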

Approach Used

Text recognition in Android using the Google Vision API

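Algorithm

Step 1 - Set up the layout.

Design the activity layout. Create a layout file that contains a SurfaceView for the camera preview and a TextView for displaying the recognized text.

Step 2 - Create the activity.

Create a new activity class; we will name it GoogleLensActivity.

In the onCreate() method, initialize the camera and start the camera preview on the SurfaceView.

Set up a camera callback so that images are captured once the camera preview is available.

Step 3 - Display the recognized text.

Implement the TextRecognizer.Processor interface to handle the text recognition process.

In the receiveDetections() method of TextRecognizer.Processor, update the recognized-text TextView with the text recognized from the camera image.

Step 4 - Update the layout and the manifest.

Update the layout XML file to include the necessary views (SurfaceView and TextView).

Add the camera and internet permissions to the application's AndroidManifest.xml file.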
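The text-handling part of Step 3 can be sketched in plain Java, independent of the camera code. The example below is only an illustration under stated assumptions: RecognizedTextFormatter and join() are hypothetical names, not part of any Google API, and the recognized blocks are modelled as plain strings so the logic can run off-device.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Plain-Java stand-in for the string handling done inside receiveDetections().
 * The class and method names are hypothetical and not part of any Google API.
 */
final class RecognizedTextFormatter {

    private RecognizedTextFormatter() {
    }

    /**
     * Joins the recognized blocks into one string, one block per line.
     * Null and blank blocks are skipped, and each block is trimmed.
     */
    static String join(List<String> blocks) {
        List<String> cleaned = new ArrayList<>();
        for (String block : blocks) {
            if (block == null) {
                continue;
            }
            String trimmed = block.trim();
            if (!trimmed.isEmpty()) {
                cleaned.add(trimmed);
            }
        }
        return String.join("\n", cleaned);
    }
}
```

In the app, the strings would presumably come from the text of each detected block, and the joined result would be set on the TextView. Because receiveDetections() is typically not invoked on the main thread, the TextView update would likely need to be posted to the UI thread.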

XML Code

<!-- declares that the app uses the device camera -->
<uses-feature
    android:name="android.hardware.camera"
    android:required="true" />

<!-- permission for internet access -->
<uses-permission android:name="android.permission.INTERNET" />

<!-- placed inside <application>: requests the image-labeling model for the on-device labeler -->
<meta-data
    android:name="com.google.firebase.ml.vision.DEPENDENCIES"
    android:value="label" />

XML Program (AndroidManifest.xml)

<?xml version="1.0" encoding="utf-8"?>
<manifest xmlns:android="http://schemas.android.com/apk/res/android"
    package="com.example.googlelens">

    <!-- declares that the app uses the device camera -->
    <uses-feature
        android:name="android.hardware.camera"
        android:required="true" />

    <!-- permission for internet access -->
    <uses-permission android:name="android.permission.INTERNET" />

    <application
        android:allowBackup="true"
        android:icon="@mipmap/ic_launcher"
        android:label="@string/app_name"
        android:roundIcon="@mipmap/ic_launcher_round"
        android:supportsRtl="true"
        android:theme="@style/Theme.GoogleLens">

        <!-- android:exported is required on activities with an intent filter
             when targeting Android 12 (API 31) or higher -->
        <activity
            android:name=".MainActivity"
            android:exported="true">
            <intent-filter>
                <action android:name="android.intent.action.MAIN" />
                <category android:name="android.intent.category.LAUNCHER" />
            </intent-filter>
        </activity>

        <!-- requests the image-labeling model for the on-device labeler -->
        <meta-data
            android:name="com.google.firebase.ml.vision.DEPENDENCIES"
            android:value="label" />
    </application>
</manifest>

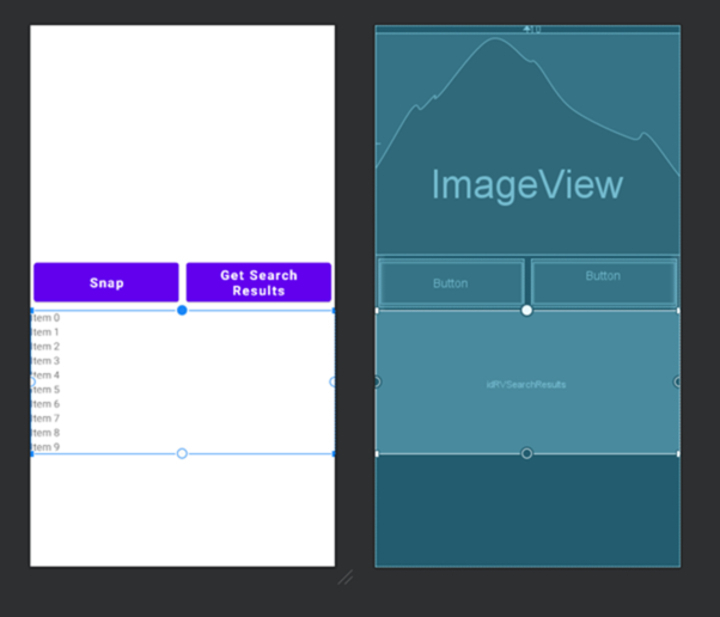

XML Program (activity_main.xml)

<?xml version="1.0" encoding="utf-8"?>
<RelativeLayout
    xmlns:android="http://schemas.android.com/apk/res/android"
    xmlns:tools="http://schemas.android.com/tools"
    android:layout_width="match_parent"
    android:layout_height="match_parent"
    tools:context=".MainActivity">

    <!-- image view for displaying the captured image -->
    <ImageView
        android:id="@+id/image"
        android:layout_width="match_parent"
        android:layout_height="300dp"
        android:layout_alignParentTop="true"
        android:layout_centerHorizontal="true"
        android:layout_marginTop="10dp"
        android:scaleType="centerCrop" />

    <LinearLayout
        android:id="@+id/idLLButtons"
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:layout_below="@id/image"
        android:orientation="horizontal">

        <!-- button for capturing an image -->
        <Button
            android:id="@+id/snapbtn"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_gravity="center"
            android:layout_margin="5dp"
            android:layout_marginTop="30dp"
            android:layout_weight="1"
            android:lines="2"
            android:text="Snap"
            android:textAllCaps="false"
            android:textSize="18sp"
            android:textStyle="bold" />

        <!-- button for detecting the object and fetching search results -->
        <Button
            android:id="@+id/idBtnSearchResuts"
            android:layout_width="0dp"
            android:layout_height="wrap_content"
            android:layout_gravity="center"
            android:layout_margin="5dp"
            android:layout_marginTop="30dp"
            android:layout_weight="1"
            android:lines="2"
            android:text="Get Search Results"
            android:textAllCaps="false"
            android:textSize="18sp"
            android:textStyle="bold" />

    </LinearLayout>

    <!-- recycler view for displaying the list of results -->
    <androidx.recyclerview.widget.RecyclerView
        android:id="@+id/idRVSearchResults"
        android:layout_width="match_parent"
        android:layout_height="wrap_content"
        android:layout_below="@id/idLLButtons" />

</RelativeLayout>

Java Program (MainActivity.java)

// package name taken from the manifest above
package com.example.googlelens;

import android.content.Intent;
import android.graphics.Bitmap;
import android.os.Bundle;
import android.provider.MediaStore;
import android.view.View;
import android.widget.Button;
import android.widget.ImageView;
import android.widget.Toast;

import androidx.annotation.NonNull;
import androidx.appcompat.app.AppCompatActivity;
import androidx.recyclerview.widget.LinearLayoutManager;
import androidx.recyclerview.widget.RecyclerView;

import com.android.volley.Request;
import com.android.volley.RequestQueue;
import com.android.volley.Response;
import com.android.volley.VolleyError;
import com.android.volley.toolbox.JsonObjectRequest;
import com.android.volley.toolbox.Volley;
import com.google.android.gms.tasks.OnFailureListener;
import com.google.android.gms.tasks.OnSuccessListener;
import com.google.firebase.ml.vision.FirebaseVision;
import com.google.firebase.ml.vision.common.FirebaseVisionImage;
import com.google.firebase.ml.vision.label.FirebaseVisionImageLabel;
import com.google.firebase.ml.vision.label.FirebaseVisionImageLabeler;

import org.json.JSONArray;
import org.json.JSONException;
import org.json.JSONObject;

import java.util.ArrayList;
import java.util.List;

public class MainActivity extends AppCompatActivity {

    static final int REQUEST_IMAGE_CAPTURE = 1;

    // variables for our image view, image bitmap,
    // buttons, recycler view, adapter and array list.
    private ImageView img;
    private Button snap, searchResultsBtn;
    private Bitmap imageBitmap;
    private RecyclerView resultRV;
    private SearchResultsRVAdapter searchResultsRVAdapter;
    private ArrayList<DataModal> dataModalArrayList;
    private String title, link, displayed_link, snippet;

    @Override
    protected void onCreate(Bundle savedInstanceState) {
        super.onCreate(savedInstanceState);
        setContentView(R.layout.activity_main);

        // initializing all our variables for views
        img = findViewById(R.id.image);
        snap = findViewById(R.id.snapbtn);
        searchResultsBtn = findViewById(R.id.idBtnSearchResuts);
        resultRV = findViewById(R.id.idRVSearchResults);

        // initializing our array list
        dataModalArrayList = new ArrayList<>();

        // initializing our adapter class.
        searchResultsRVAdapter = new SearchResultsRVAdapter(dataModalArrayList, MainActivity.this);

        // layout manager for our recycler view.
        LinearLayoutManager manager = new LinearLayoutManager(MainActivity.this, LinearLayoutManager.HORIZONTAL, false);

        // setting the layout manager and adapter on our recycler view.
        resultRV.setLayoutManager(manager);
        resultRV.setAdapter(searchResultsRVAdapter);

        // adding on click listener for our snap button.
        snap.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                // calling a method to capture an image.
                dispatchTakePictureIntent();
            }
        });

        // adding on click listener for our button to search data.
        searchResultsBtn.setOnClickListener(new View.OnClickListener() {
            @Override
            public void onClick(View v) {
                // calling a method to get search results.
                getResults();
            }
        });
    }

    private void getResults() {
        // nothing to label until the user has captured a picture.
        if (imageBitmap == null) {
            Toast.makeText(MainActivity.this, "Please capture an image first..", Toast.LENGTH_SHORT).show();
            return;
        }

        // wrapping the captured bitmap in a firebase vision image.
        FirebaseVisionImage image = FirebaseVisionImage.fromBitmap(imageBitmap);

        // creating an on-device image labeler.
        FirebaseVisionImageLabeler labeler = FirebaseVision.getInstance().getOnDeviceImageLabeler();

        // processing the image and listening for the result.
        labeler.processImage(image).addOnSuccessListener(new OnSuccessListener<List<FirebaseVisionImageLabel>>() {
            @Override
            public void onSuccess(List<FirebaseVisionImageLabel> firebaseVisionImageLabels) {
                // the labeler may return no labels, so guard before reading the first one.
                if (firebaseVisionImageLabels.isEmpty()) {
                    Toast.makeText(MainActivity.this, "No label found for the image..", Toast.LENGTH_SHORT).show();
                    return;
                }
                // using the first label as the search query.
                String searchQuery = firebaseVisionImageLabels.get(0).getText();
                searchData(searchQuery);
            }
        }).addOnFailureListener(new OnFailureListener() {
            @Override
            public void onFailure(@NonNull Exception e) {
                // displaying error message.
                Toast.makeText(MainActivity.this, "Fail to detect image..", Toast.LENGTH_SHORT).show();
            }
        });
    }

    private void searchData(String searchQuery) {
        String apiKey = "Enter your API key here";

        // encoding the query so that spaces and special characters do not break the URL.
        String url = "https://serpapi.com/search.json?q=" + android.net.Uri.encode(searchQuery.trim()) + "&location=Delhi,India&hl=en&gl=us&google_domain=google.com&api_key=" + apiKey;

        // creating a new variable for our request queue
        RequestQueue queue = Volley.newRequestQueue(MainActivity.this);

        JsonObjectRequest jsonObjectRequest = new JsonObjectRequest(Request.Method.GET, url, null, new Response.Listener<JSONObject>() {
            @Override
            public void onResponse(JSONObject response) {
                try {
                    // extracting the list of organic results from the json response.
                    JSONArray organicResultsArray = response.getJSONArray("organic_results");

                    // clearing results left over from any previous search.
                    dataModalArrayList.clear();

                    for (int i = 0; i < organicResultsArray.length(); i++) {
                        JSONObject organicObj = organicResultsArray.getJSONObject(i);

                        // reading each field, falling back to an empty string when it is
                        // missing so a value from the previous result is never reused.
                        title = organicObj.optString("title", "");
                        link = organicObj.optString("link", "");
                        displayed_link = organicObj.optString("displayed_link", "");
                        snippet = organicObj.optString("snippet", "");

                        // adding data to our array list.
                        dataModalArrayList.add(new DataModal(title, link, displayed_link, snippet));
                    }

                    // notifying our adapter class that the array list has changed.
                    searchResultsRVAdapter.notifyDataSetChanged();
                } catch (JSONException e) {
                    e.printStackTrace();
                }
            }
        }, new Response.ErrorListener() {
            @Override
            public void onErrorResponse(VolleyError error) {
                // displaying error message.
                Toast.makeText(MainActivity.this, "No Result found for the search query..", Toast.LENGTH_SHORT).show();
            }
        });

        // adding json object request to our queue.
        queue.add(jsonObjectRequest);
    }

    // method to capture image.
    private void dispatchTakePictureIntent() {
        // using an implicit intent to ask a camera app to capture an image.
        Intent takePictureIntent = new Intent(MediaStore.ACTION_IMAGE_CAPTURE);
        if (takePictureIntent.resolveActivity(getPackageManager()) != null) {
            // starting the camera activity and waiting for the captured image.
            startActivityForResult(takePictureIntent, REQUEST_IMAGE_CAPTURE);
        }
    }

    @Override
    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);

        // reading the thumbnail bitmap returned by the camera app;
        // the intent or its extras may be null, so both are checked first.
        if (requestCode == REQUEST_IMAGE_CAPTURE && resultCode == RESULT_OK && data != null) {
            Bundle extras = data.getExtras();
            if (extras == null) {
                return;
            }
            imageBitmap = (Bitmap) extras.get("data");

            // setting our bitmap to our image view.
            img.setImageBitmap(imageBitmap);
        }
    }
}
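Two steps in MainActivity can be tried out without a device: choosing which label to search for, and building the request URL. The sketch below is a minimal plain-Java illustration, not part of the app above. LensSearchHelper, Label and the minConfidence threshold are hypothetical names introduced for this example, and java.net.URLEncoder is used for the encoding so that the code runs on a desktop JVM.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.List;

/**
 * Plain-Java sketch of two steps from MainActivity: choosing a label and building the URL.
 * LensSearchHelper, Label and minConfidence are hypothetical names used only for illustration.
 */
final class LensSearchHelper {

    /** Minimal stand-in for a detected label: its text and a confidence between 0 and 1. */
    static final class Label {
        final String text;
        final float confidence;

        Label(String text, float confidence) {
            this.text = text;
            this.confidence = confidence;
        }
    }

    private LensSearchHelper() {
    }

    /** Returns the text of the most confident label at or above minConfidence, or null if none. */
    static String pickBestLabel(List<Label> labels, float minConfidence) {
        Label best = null;
        for (Label label : labels) {
            if (label.confidence < minConfidence) {
                continue;
            }
            if (best == null || label.confidence > best.confidence) {
                best = label;
            }
        }
        return best == null ? null : best.text;
    }

    /** Builds the same search URL as searchData(), with the query and key URL-encoded. */
    static String buildSearchUrl(String query, String apiKey) {
        return "https://serpapi.com/search.json?q=" + encode(query.trim())
                + "&location=Delhi,India&hl=en&gl=us&google_domain=google.com&api_key="
                + encode(apiKey);
    }

    private static String encode(String value) {
        try {
            return URLEncoder.encode(value, StandardCharsets.UTF_8.name());
        } catch (UnsupportedEncodingException e) {
            // UTF-8 is a required charset on every Java platform.
            throw new IllegalStateException(e);
        }
    }
}
```

In the app, each Label would presumably be filled from the getText() and getConfidence() values of a FirebaseVisionImageLabel. Picking the maximum explicitly avoids relying on the order in which labels are returned, and a null result signals that no label was confident enough to search for.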

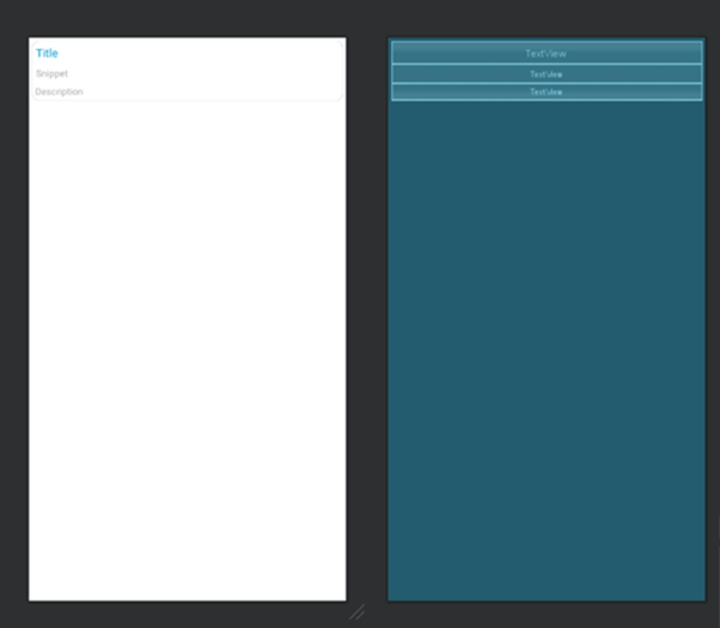

輸出

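Conclusion

This article introduced how to use the Google Vision API for content recognition, and it leaves plenty of room for modification and extension to suit the needs of your own application. You can make the app more useful by adding features such as language translation, entity recognition, and question recognition. Text recognition opens up many possibilities: you can build applications that extract and translate content from images, assist visually impaired users, automate data entry, and more.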
