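Handwritten Digit Recognition Using Neural Networks

Introduction

Handwritten digit recognition is a form of image recognition, which is widely used in computer vision within deep learning. Image recognition is one of the most basic, preliminary stages of almost every image- or video-related deep learning task. This article gives an overview of handwritten digit recognition and shows how image recognition extends to multi-class classification.

Before continuing, let us look at the difference between binary image classification and multi-class image classification.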

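Binary Image Classification

In binary image classification, the model chooses between two classes. For example, it classifies an image as either a cat or a dog.

Multi-class Image Classification

In multi-class image classification, the model chooses among more than two classes. For example, Fashion-MNIST and handwritten digit recognition each have 10 classes to predict.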
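With 10 classes, integer labels are commonly turned into one-hot vectors before training. The sketch below illustrates the idea in plain Python; `one_hot` is a hypothetical helper written for this illustration (it is not a Keras function), and it is assumed to mirror what `to_categorical` does for a single integer label in the implementation later in this article.

```python
def one_hot(label, num_classes=10):
    """Return a list of length num_classes with 1.0 at position `label`.

    Hypothetical helper for illustration only; not part of Keras.
    """
    if not 0 <= label < num_classes:
        raise ValueError("label must be in the range [0, num_classes)")
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec


# The digit 3 becomes a vector with a single 1.0 at index 3.
encoded = one_hot(3)
print(encoded)
```

Each class gets its own position in the vector, so the model can be trained to output one score per class.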

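Handwritten Digit Recognition

This task is a case of multi-class image classification in which the model predicts which digit, from 0 to 9, the input image shows.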
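As a rough illustration of how a single digit is chosen among the 10 classes, the sketch below applies a softmax to ten raw scores and picks the index of the largest probability. The scores are made-up values, not the output of any trained model, and the `softmax` function is a plain-Python stand-in for the softmax activation used in the final layer of the network later in this article.

```python
import math


def softmax(scores):
    """Convert raw scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for the digits 0..9 (illustrative values only).
scores = [0.1, 0.3, 2.5, 0.0, -1.0, 0.2, 0.4, 1.1, 0.0, -0.5]
probs = softmax(scores)

# The predicted digit is the index with the highest probability.
predicted_digit = max(range(len(probs)), key=probs.__getitem__)
print(predicted_digit)
```

Here the largest score sits at index 2, so the sketch predicts the digit 2.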

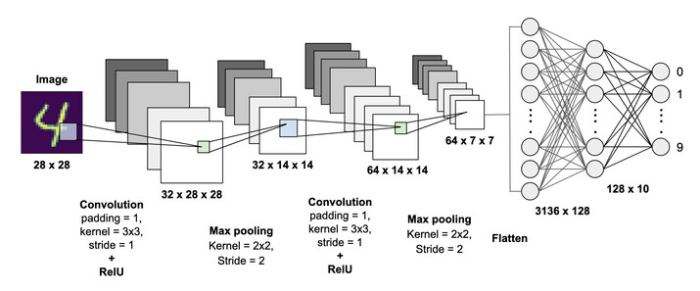

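In the MNIST digit recognition task, we use a convolutional neural network (CNN) to build a model that recognizes handwritten digits. We will download the MNIST dataset, which contains a training set of 60,000 images and a test set of 10,000 images. Each image is cropped to 28x28 pixels and shows a handwritten digit from 0 to 9.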

Implementation in Python

Example

    ## Digit Recognition
    import keras
    from keras.layers import Conv2D, MaxPooling2D
    from keras.models import Sequential
    from keras.datasets import mnist
    from keras.utils import to_categorical
    from keras.layers import Dense, Dropout, Flatten
    import matplotlib.pyplot as plt
    # Jupyter/IPython magic; remove this line when running as a plain script.
    %matplotlib inline

    num_classes = 10
    input_shape = (28, 28, 1)  # height, width, channels (grayscale)
    batch_size = 128
    epochs = 10

    # Load the MNIST training and test splits.
    (X_train, Y_train), (X_test, Y_test) = mnist.load_data()
    # Note: the second value printed is Y_train.shape (the training labels),
    # even though the message calls it "test data shape".
    print("Training data shape {} , test data shape {}".format(X_train.shape, Y_train.shape))

    # Display one training image.
    img = X_train[1]
    plt.imshow(img, cmap='gray')
    plt.show()

    # Add a channel dimension so each image has shape (28, 28, 1).
    X_train = X_train.reshape(X_train.shape[0], 28, 28, 1)
    X_test = X_test.reshape(X_test.shape[0], 28, 28, 1)

    # One-hot encode the labels.
    Y_train = to_categorical(Y_train, num_classes)
    Y_test = to_categorical(Y_test, num_classes)

    # Scale pixel values from [0, 255] to [0, 1].
    X_train = X_train.astype('float32')
    X_test = X_test.astype('float32')
    X_train /= 255
    X_test /= 255
    print('x_train shape:', X_train.shape)
    print('train samples', X_train.shape[0])
    print('test samples', X_test.shape[0])

    # Two convolutional layers, max pooling, then two dense layers.
    model = Sequential()
    model.add(Conv2D(32, kernel_size=(3, 3), activation='relu', input_shape=input_shape))
    model.add(Conv2D(64, (3, 3), activation='relu'))
    model.add(MaxPooling2D(pool_size=(2, 2)))
    model.add(Dropout(0.25))
    model.add(Flatten())
    model.add(Dense(256, activation='relu'))
    model.add(Dropout(0.5))
    model.add(Dense(num_classes, activation='softmax'))

    model.compile(loss=keras.losses.categorical_crossentropy,
                  optimizer=keras.optimizers.Adadelta(),
                  metrics=['accuracy'])

    # The test set is also used as validation data during training.
    history = model.fit(X_train, Y_train,
                        batch_size=batch_size,
                        epochs=epochs,
                        verbose=1,
                        validation_data=(X_test, Y_test))

    output_score = model.evaluate(X_test, Y_test, verbose=0)
    print('Testing loss:', output_score[0])
    print('Testing accuracy:', output_score[1])
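To see how the image size changes through the layers of this model, the following sketch works through the shape and parameter arithmetic in plain Python. It assumes the Keras defaults for the layers used above: stride 1 and no padding for `Conv2D`, and a stride equal to the pool size for `MaxPooling2D`. The helper names `conv_out` and `pool_out` are hypothetical and exist only for this illustration.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Output side length of a convolution (hypothetical helper)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, pool):
    """Output side length of max pooling with stride equal to pool size."""
    return size // pool


side = 28
side = conv_out(side, 3)       # first 3x3 convolution:  28 -> 26
side = conv_out(side, 3)       # second 3x3 convolution: 26 -> 24
side = pool_out(side, 2)       # 2x2 max pooling:        24 -> 12
flat_features = side * side * 64   # Flatten: 12 * 12 * 64 = 9216

# Trainable parameters per layer: weights plus biases.
conv1_params = 3 * 3 * 1 * 32 + 32
conv2_params = 3 * 3 * 32 * 64 + 64
dense1_params = flat_features * 256 + 256
dense2_params = 256 * 10 + 10
total_params = conv1_params + conv2_params + dense1_params + dense2_params

print(flat_features, total_params)
```

Under these assumptions, most of the parameters sit in the first dense layer, because it connects all 9216 flattened features to 256 units.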

Output

    Training data shape (60000, 28, 28) , test data shape (60000,)
    x_train shape: (60000, 28, 28, 1)
    train samples 60000
    test samples 10000
    Epoch 1/10
    469/469 [==============================] - 13s 10ms/step - loss: 2.2877 - accuracy: 0.1372 - val_loss: 2.2598 - val_accuracy: 0.2177
    Epoch 2/10
    469/469 [==============================] - 4s 9ms/step - loss: 2.2428 - accuracy: 0.2251 - val_loss: 2.2058 - val_accuracy: 0.3345
    Epoch 3/10
    469/469 [==============================] - 5s 10ms/step - loss: 2.1863 - accuracy: 0.3062 - val_loss: 2.1340 - val_accuracy: 0.4703
    Epoch 4/10
    469/469 [==============================] - 5s 10ms/step - loss: 2.1071 - accuracy: 0.3943 - val_loss: 2.0314 - val_accuracy: 0.5834
    Epoch 5/10
    469/469 [==============================] - 4s 9ms/step - loss: 1.9948 - accuracy: 0.4911 - val_loss: 1.8849 - val_accuracy: 0.6767
    Epoch 6/10
    469/469 [==============================] - 4s 10ms/step - loss: 1.8385 - accuracy: 0.5744 - val_loss: 1.6841 - val_accuracy: 0.7461
    Epoch 7/10
    469/469 [==============================] - 4s 10ms/step - loss: 1.6389 - accuracy: 0.6316 - val_loss: 1.4405 - val_accuracy: 0.7825
    Epoch 8/10
    469/469 [==============================] - 5s 10ms/step - loss: 1.4230 - accuracy: 0.6694 - val_loss: 1.1946 - val_accuracy: 0.8078
    Epoch 9/10
    469/469 [==============================] - 5s 10ms/step - loss: 1.2229 - accuracy: 0.6956 - val_loss: 0.9875 - val_accuracy: 0.8234
    Epoch 10/10
    469/469 [==============================] - 5s 11ms/step - loss: 1.0670 - accuracy: 0.7168 - val_loss: 0.8342 - val_accuracy: 0.8353
    Testing loss: 0.8342439532279968
    Testing accuracy: 0.8353000283241272
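The `469/469` counter in each epoch follows from the training set size and the batch size. The short sketch below reproduces that arithmetic, using the 60,000 training samples and the batch size of 128 from the example; the variable names are chosen for illustration.

```python
import math

train_samples = 60000
batch_size = 128

# Number of batches per epoch; the last batch may be smaller than the rest.
steps_per_epoch = math.ceil(train_samples / batch_size)
last_batch_size = train_samples - (steps_per_epoch - 1) * batch_size

print(steps_per_epoch, last_batch_size)
```

Because the test set is passed as `validation_data`, the `val_loss` and `val_accuracy` of the last epoch match the testing loss and testing accuracy printed at the end.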

Conclusion

In this article, we learned how to use a neural network to recognize handwritten digits.
